Apple's ELEGNT solution can benefit from distributed ambient displays, rather than a robot

Apple's robotic lamp could benefit from delegating some of its tasks to other ambient displays, while the robot focuses on what's on the desk.

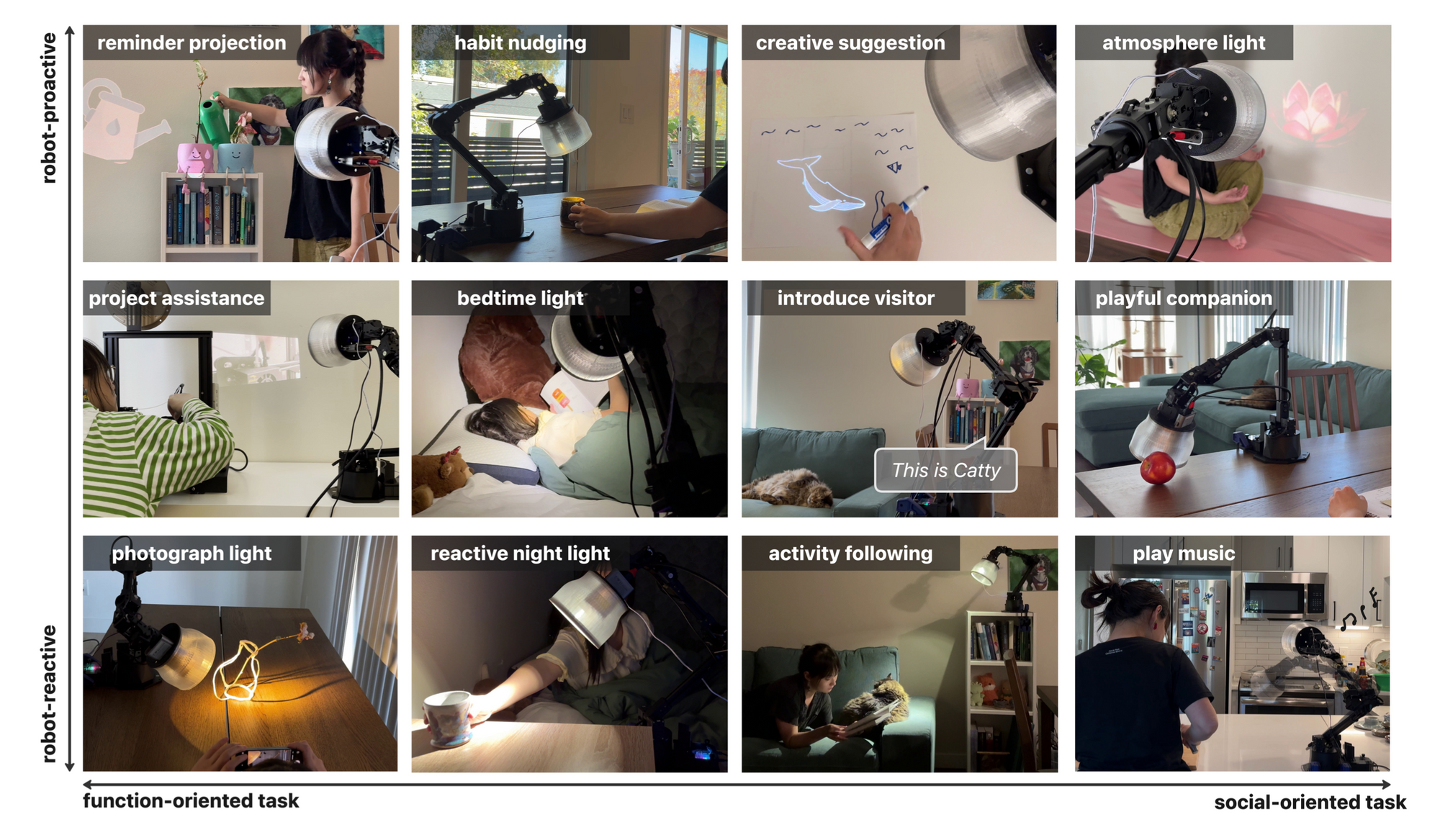

On its Machine Learning Research blog, Apple posted a rather remarkable non-anthropomorphic robot. It accomplishes tasks by moving on a robotic arm to follow the user around. It's a super fun exploration and obviously something that deeply interests me. One of the three prototypes was an ambient lamp that acted as a central computing unit.

Anyway, you can read my entire thesis here: it approaches a similar end "product" through a completely different, more human-centric lens, asking how we can design distraction-free computing for students.

One key difference I spotted, though, was how Apple's researchers handle these kinds of tasks:

The lamp physically moves around to help with different tasks. While this keeps everything contained in a single product, the approach has strong privacy implications. You don't want a camera pointed at many parts of your room, much less a robotic camera that could mistakenly point in a direction it shouldn't.

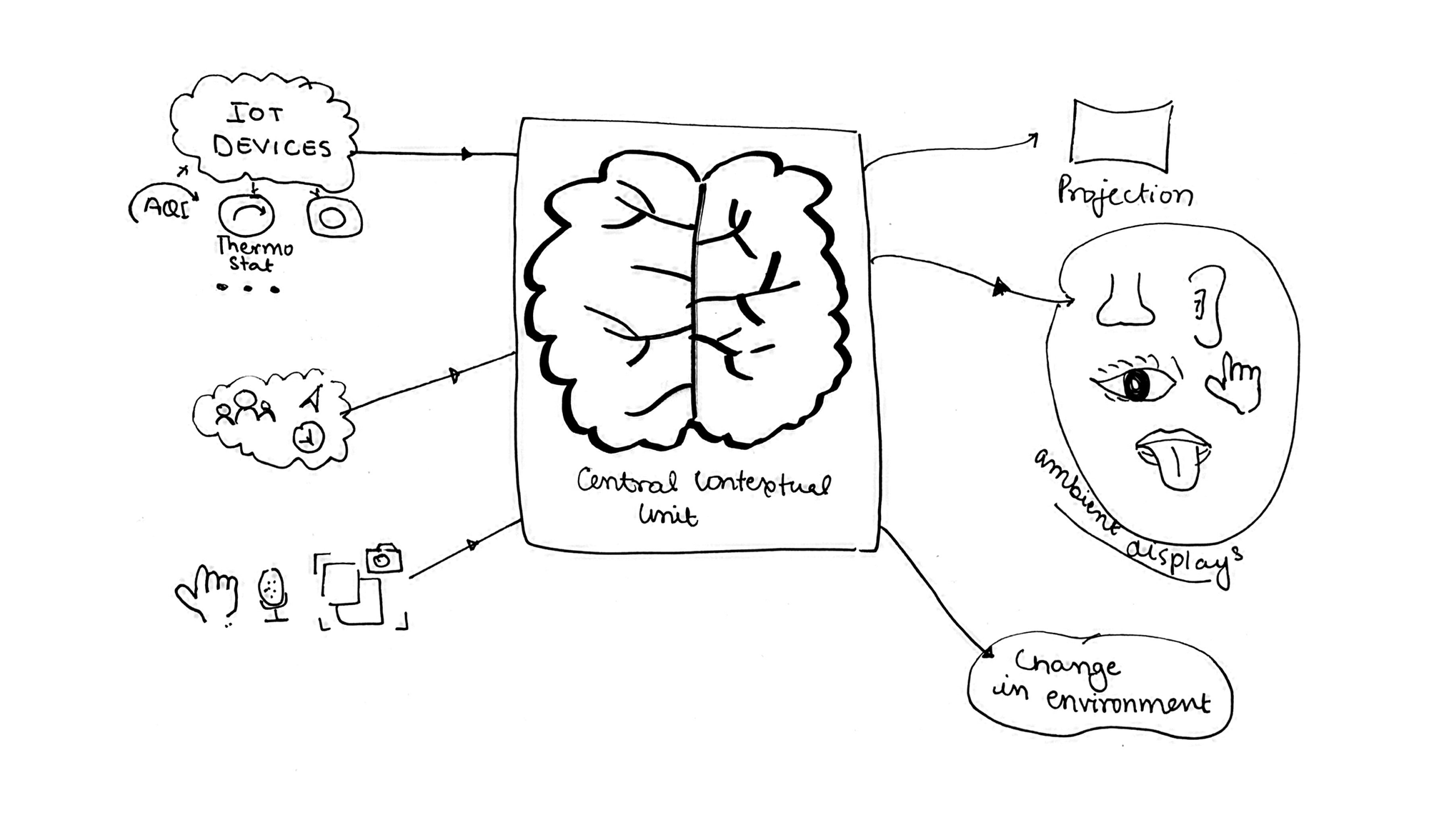

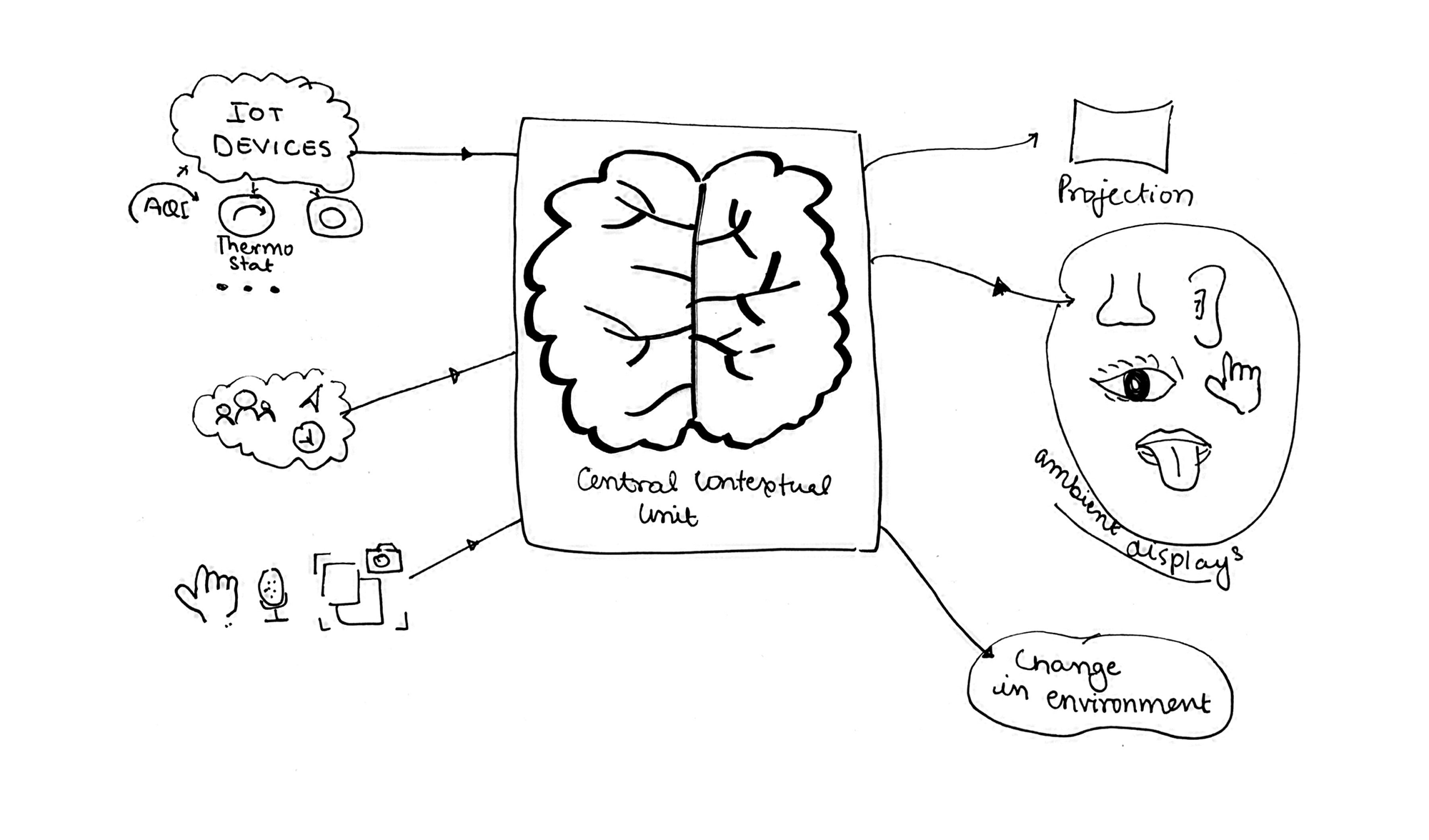

What such a system could benefit from, though, is the ability to interface with other ambient objects in the room: a spatial world of small, "dumb" ambient displays that understand the context they are placed in and inform the user from time to time.

For example, the nudge to water the plants could come from the plant itself: a breeze blowing near it, a subtle tap on the plant from an ambient object, or a glow in its direction. Such calm nudges not only get the job done, they also create a calmer, background flow of information that doesn't immediately grab a person's attention.

By distributing the "display of information," the system not only mitigates the privacy concerns of such ubiquitous computing systems (ideally, ambient displays shouldn't have input sensors; they should simply communicate over Matter over Thread or BLE) but also becomes more situationally aware. A plant offers a much better spatial affordance for watering it than a lamp projecting light near it.
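To make the routing idea concrete, here is a minimal sketch, assuming a central hub that knows which output-only display sits next to which object. Everything in it (`Hub`, `AmbientDisplay`, `Cue`, `nudge`, `render`) is hypothetical and illustrative, not part of Apple's system or of any Matter/BLE library; `render` merely records the cue where a real device would receive a command over its wireless link. The point is that displays expose no sensor-reading methods at all, and the hub chooses *where* a nudge appears based on the object it concerns.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Cue(Enum):
    """Calm, low-attention output modalities a display might offer (illustrative)."""
    GLOW = "glow"
    BREEZE = "breeze"
    TAP = "tap"


@dataclass
class AmbientDisplay:
    """Output-only device: it can render a cue but has no sensors to read."""
    name: str
    anchored_to: str            # physical object it sits next to, e.g. "plant"
    cues: tuple[Cue, ...]
    log: list[str] = field(default_factory=list)

    def render(self, cue: Cue) -> None:
        # Hypothetical stand-in for sending a command over Thread/BLE;
        # here we only record which cue was shown.
        self.log.append(cue.value)


class Hub:
    """Central unit that decides where a nudge appears, not just whether."""

    def __init__(self) -> None:
        self._by_object: dict[str, AmbientDisplay] = {}

    def register(self, display: AmbientDisplay) -> None:
        self._by_object[display.anchored_to] = display

    def nudge(self, target_object: str, preferred: Cue = Cue.GLOW) -> str | None:
        """Route a nudge to the display anchored to the object it concerns."""
        display = self._by_object.get(target_object)
        if display is None or not display.cues:
            return None  # nothing suitable nearby: stay silent rather than interrupt
        # Use the preferred cue if supported, otherwise the display's first cue.
        cue = preferred if preferred in display.cues else display.cues[0]
        display.render(cue)
        return display.name


if __name__ == "__main__":
    hub = Hub()
    plant_light = AmbientDisplay("plant-light", "plant", (Cue.GLOW, Cue.BREEZE))
    hub.register(plant_light)
    print(hub.nudge("plant", Cue.BREEZE))  # plant-light
    print(plant_light.log)                 # ['breeze']
```

One design choice worth noting: when no display is anchored to the relevant object, the hub does nothing instead of falling back to a more intrusive channel, which keeps the information flow in the background.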

All in all, it's great to see more research in this direction. I see embodied cognition as far more useful than virtual reality for accomplishing day-to-day tasks, though such tangible user interfaces may still be 5 to 10 years off.