What the Ai Pin was really good at

I wore the Ai Pin every day for six months. For research. Here’s what it was really good at.

I wore the Ai Pin every day for almost six months, and very early on I realized one thing: the Ai Pin wasn’t a complete failure. There were kernels of something truly unique and ambitious there, even if the execution was flawed. What started as an albatross around my neck (or shirt) for spending $700 on a device that barely worked turned into a tiny research project for my thesis on ambient computing, and on where a ubiquitous computer like this one would fit into people’s lives.

What it’s good at

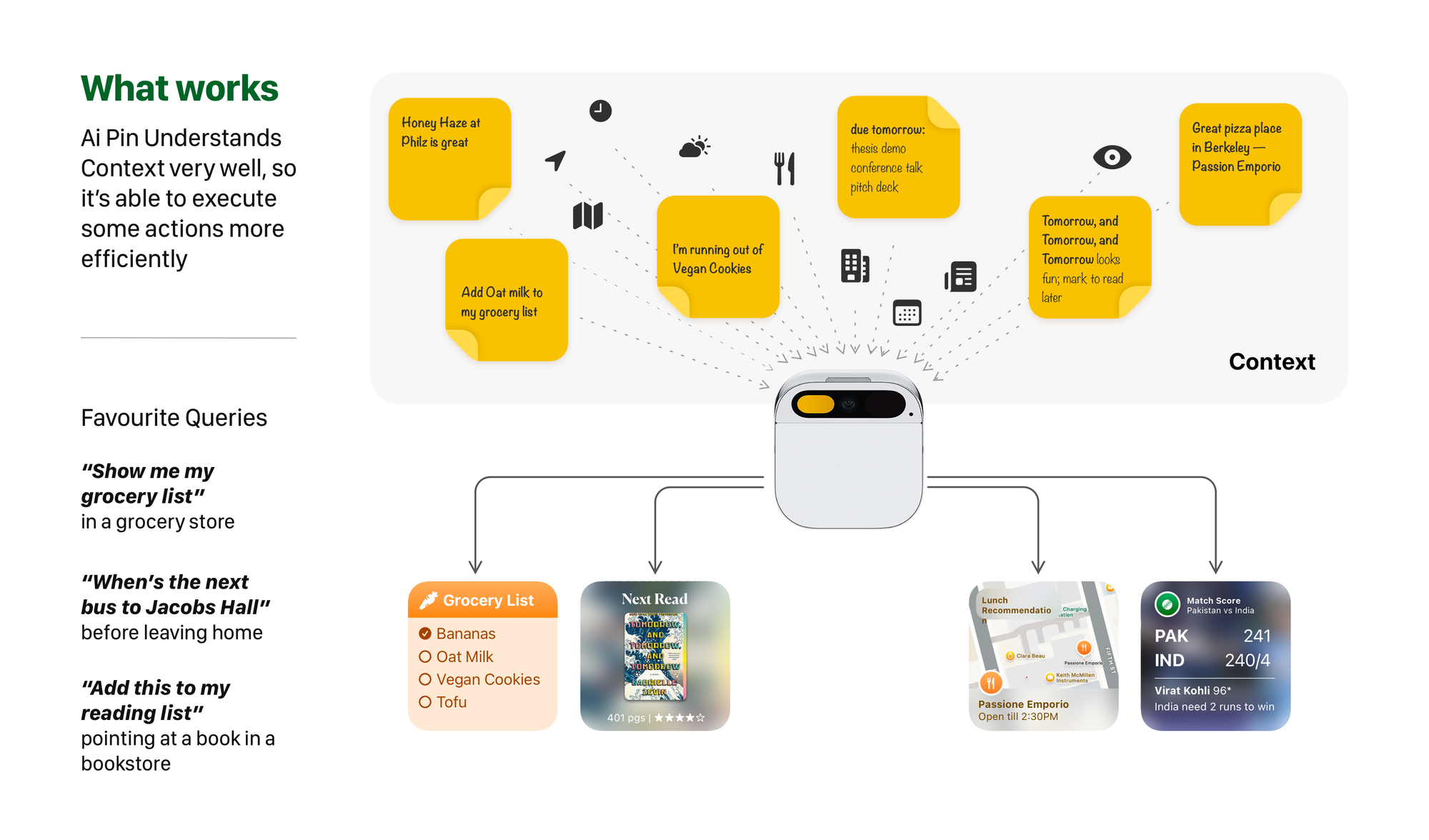

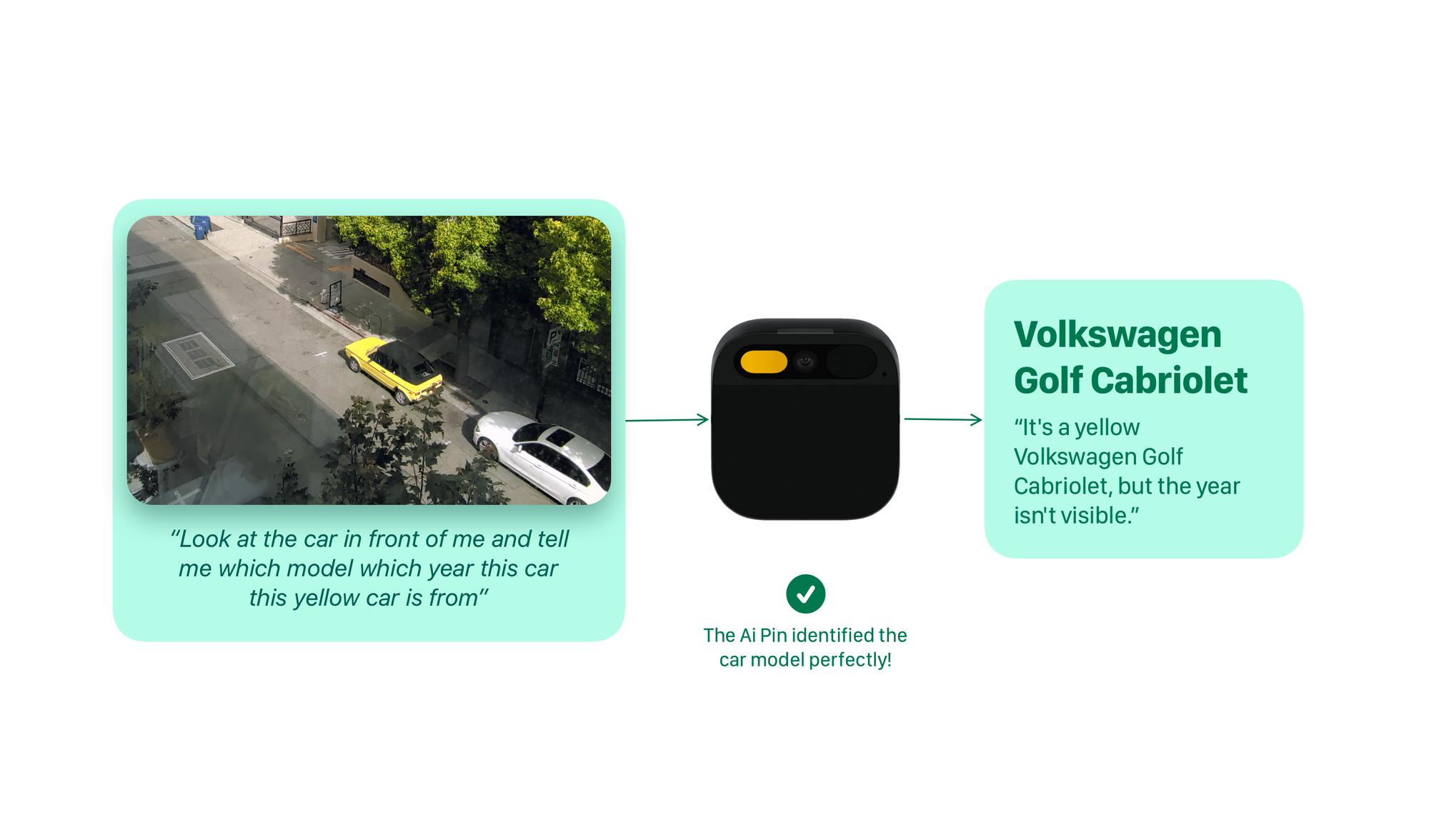

Context

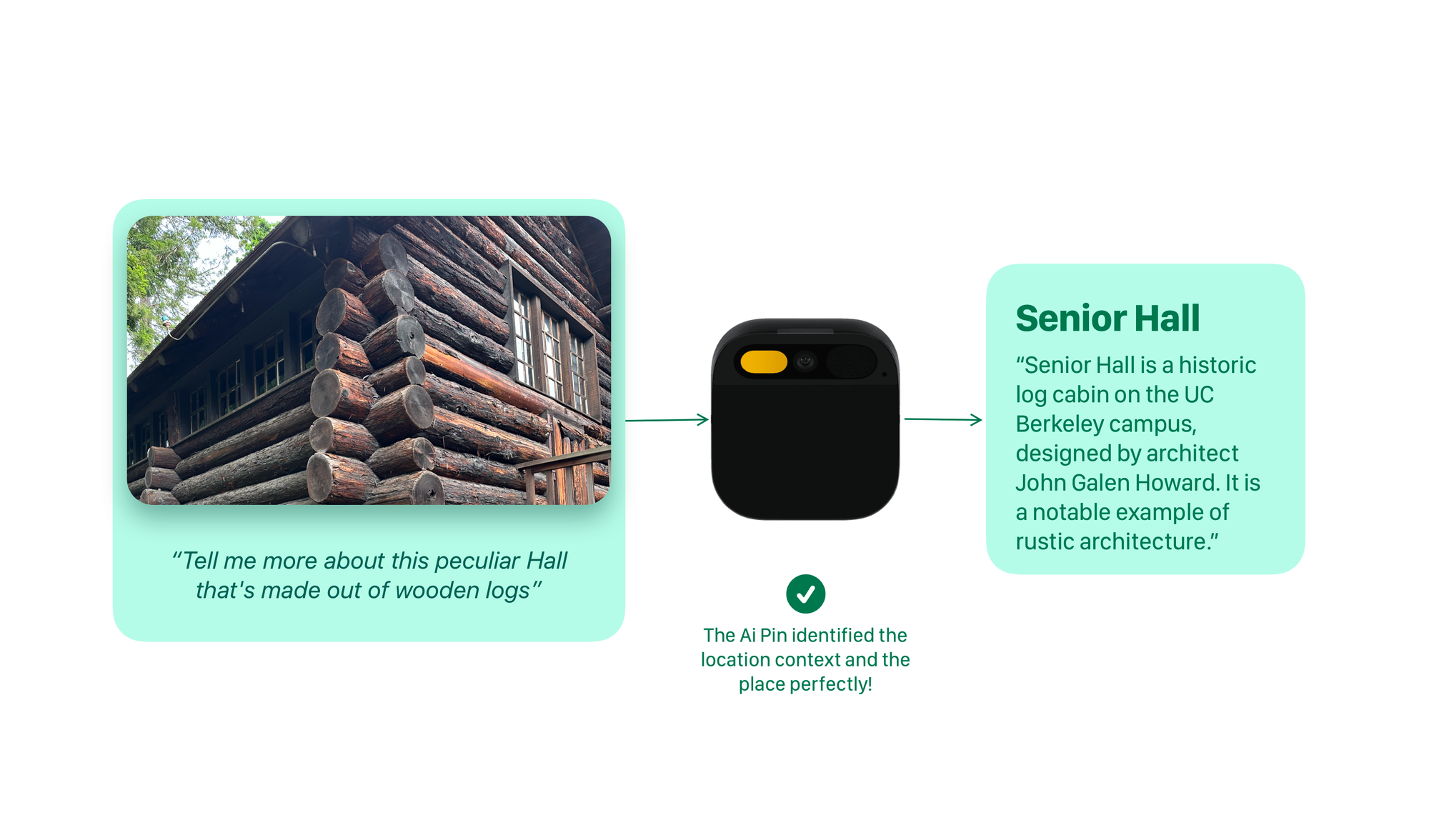

The Ai Pin’s contextual understanding of my environment was pretty impeccable. In one instance, I was looking at an old building near the Faculty Hall in Berkeley, and it neatly told me all about it. On another occasion, I asked whether the restaurant I was standing outside had any vegan options, and it promptly listed them.

Actions that are quick

Because the Ai Pin understands context so well, it was also much better at performing quick actions*.

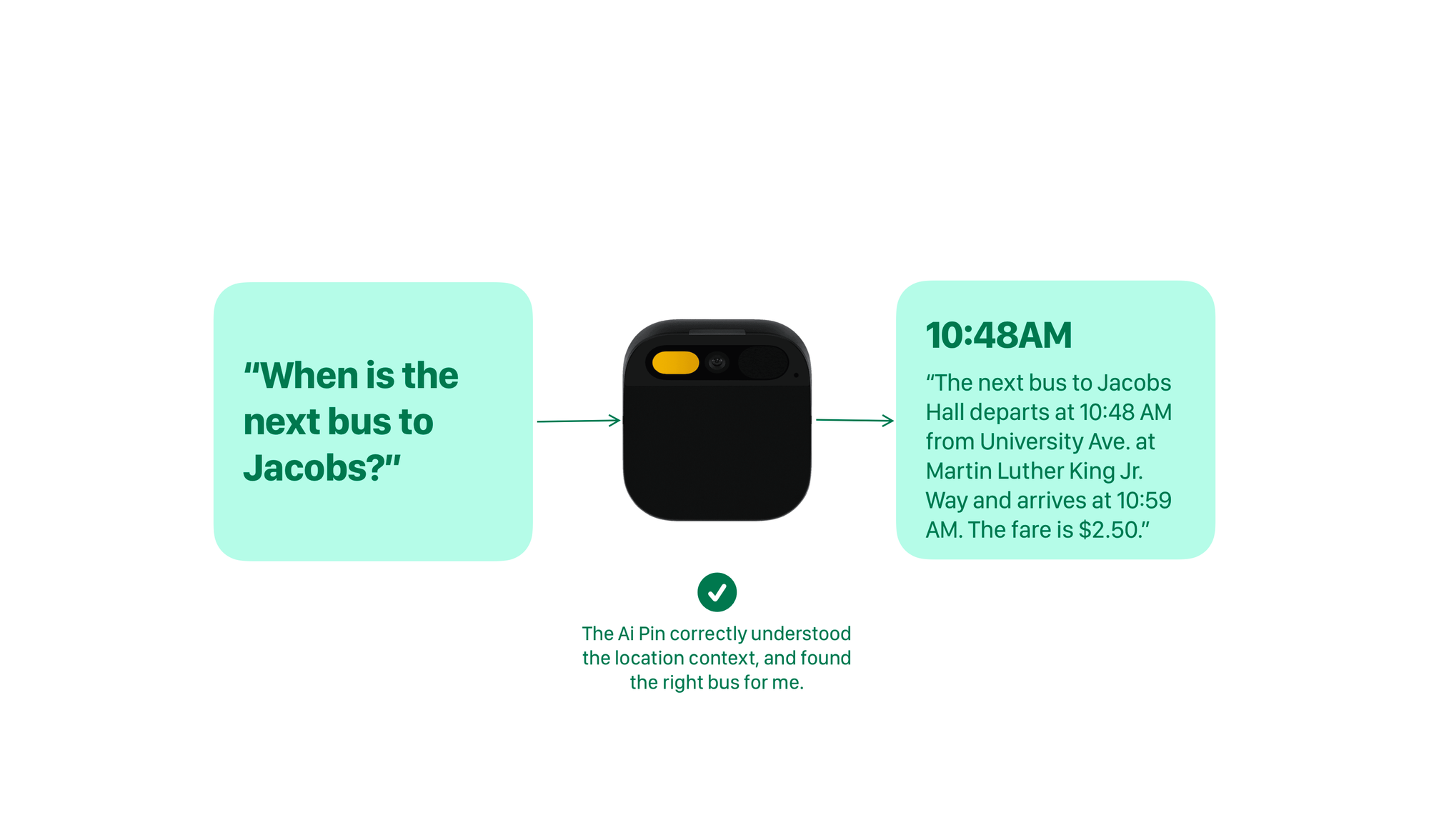

Bus Schedules

Groceries

Pizza Places from notes

Here I’m asking it about the pizza places I’ve saved in my notes, and it’s able to surface them.

Reading List

Pizza Proportions

I love this. Its ability to remember and surface pizza proportions is a godsend: I just tell it to save them to my notes, and then I don’t need to touch my phone while I’m kneading the dough.

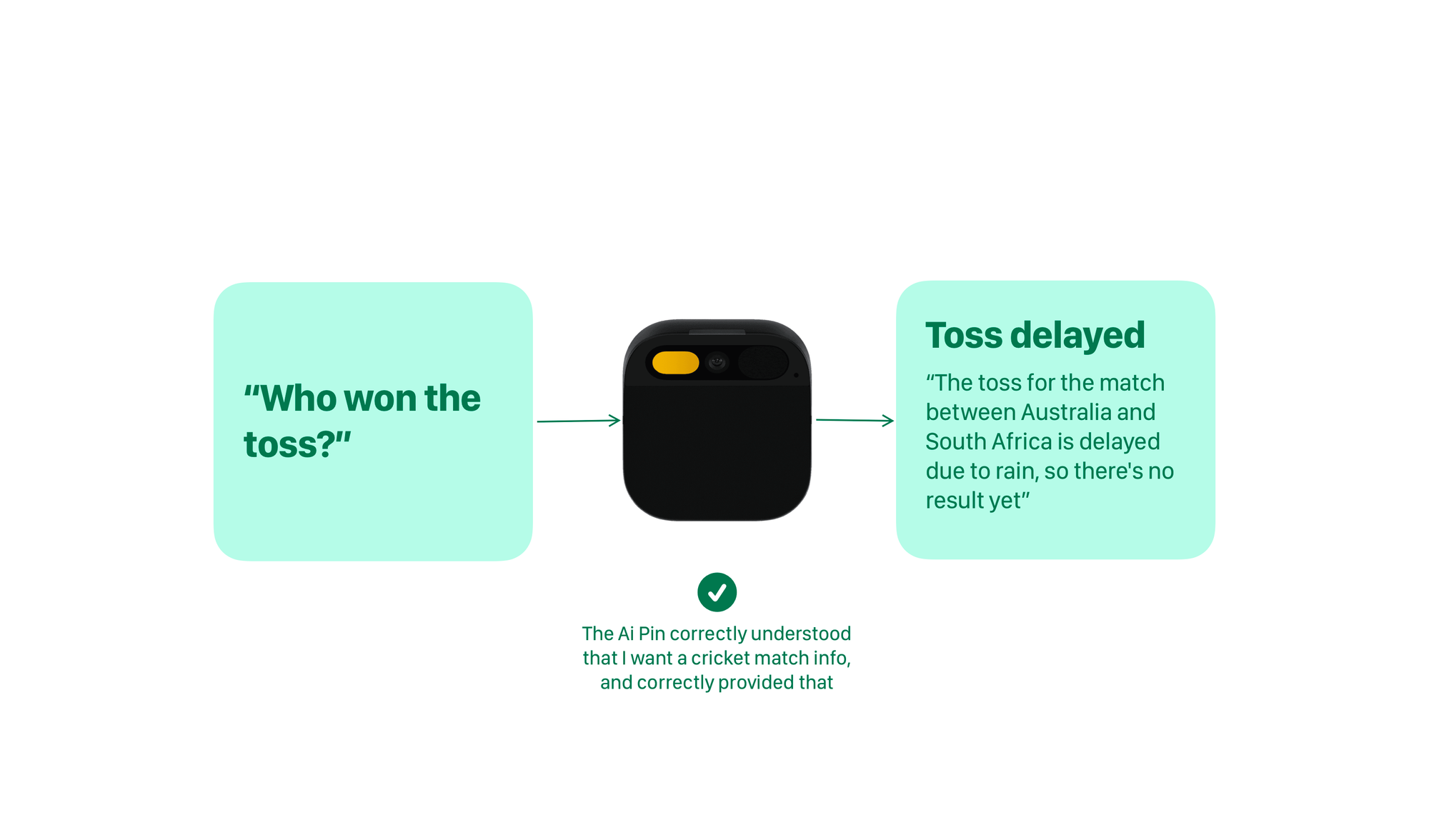

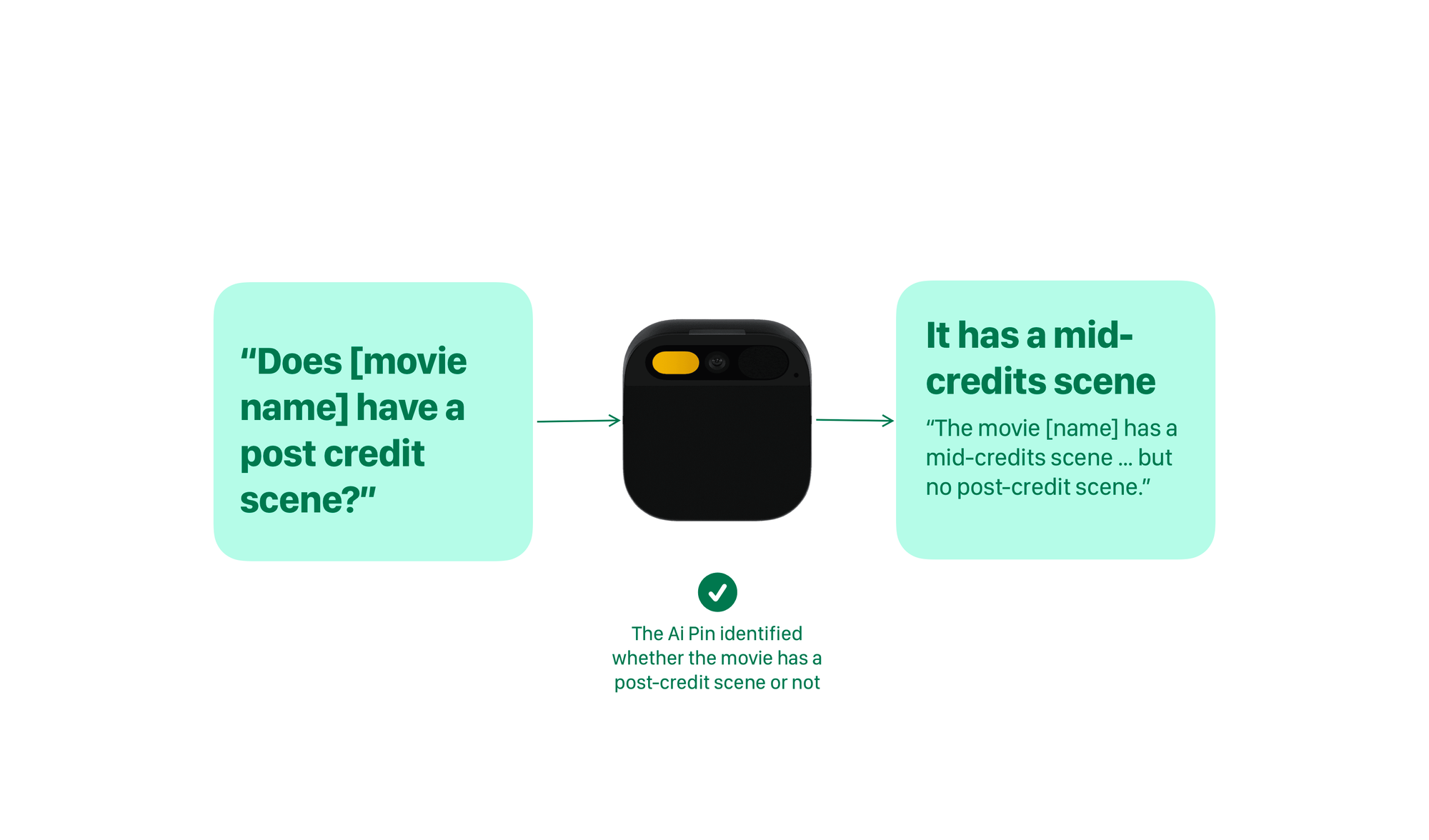

Instant Information

The Ai Pin is also good at providing answers to questions that are rather fuzzy, or would otherwise require some hefty googling.

It understands context and provides information by cutting through layers of interface.

Here’s the common link: it’s “theoretically” great at quick actions, where taking out a phone and interacting with it is a long process.

Using notes as context is ingenious. It gives the Pin far more context and much more depth in its responses, with as little back-and-forth as possible.

Showed improvements where it mattered

To Humane’s credit, the Ai Pin also improved where it mattered over the first couple of months. The first few days with the Pin were painful: it would get dangerously hot and shut down after minutes of use. By June those issues were mostly ironed out, and the device was delivering a decent 12-hour battery life, which is incredible given where it started.

Potential as a Home Automation device

As you can see above, the Ai Pin’s understanding of context lends itself naturally to taking input from, and controlling, IoT devices and services. I would love to open my apartment door with a tap on this device, or have it turn on the lights when I walk into a particular room. Because it sits on my chest at all times, it is suited to some really interesting spatial experiences; sadly, the Pin never tapped into any of that. Its communication with other objects in the physical world remained limited.

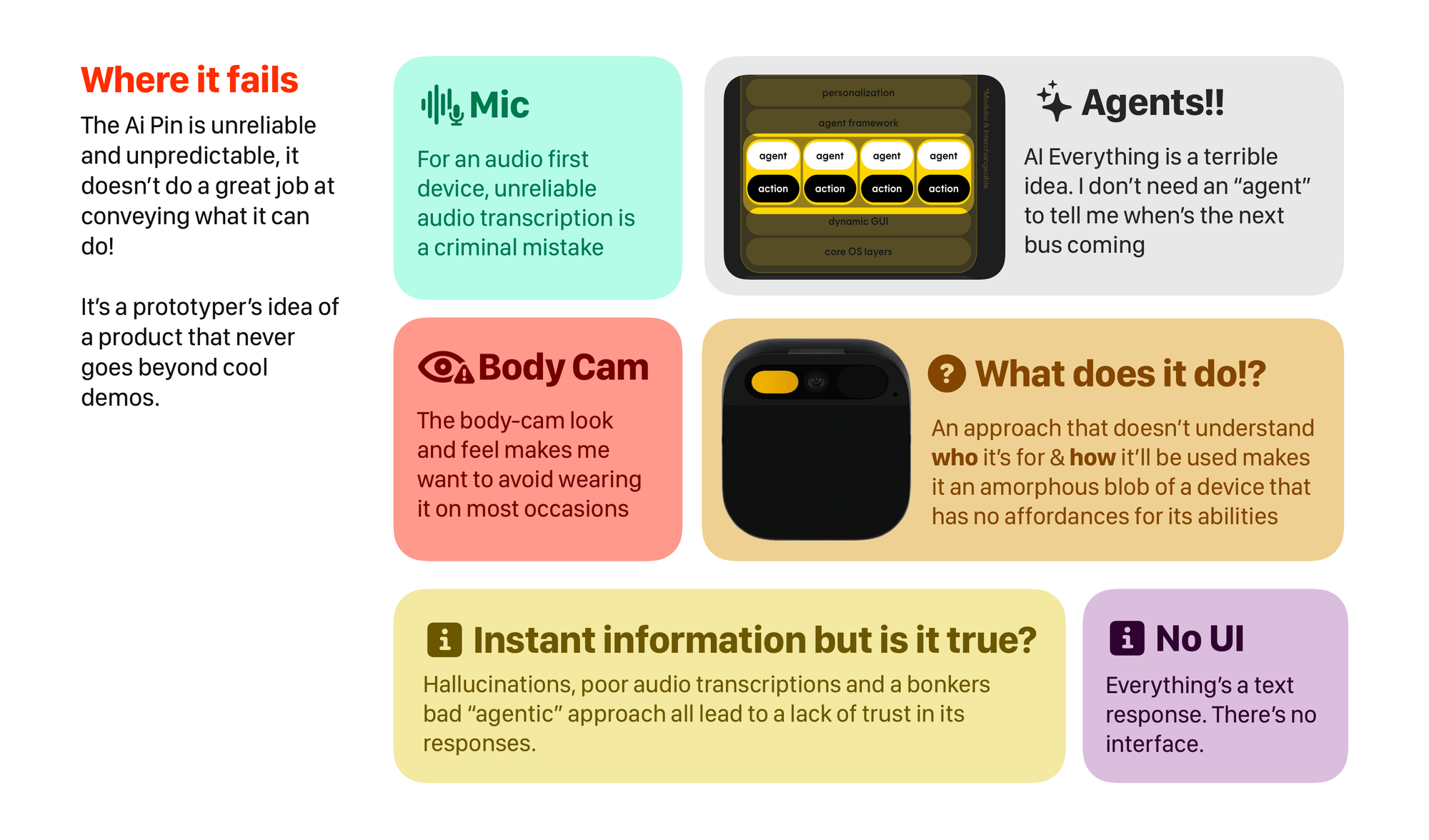

Where it fails

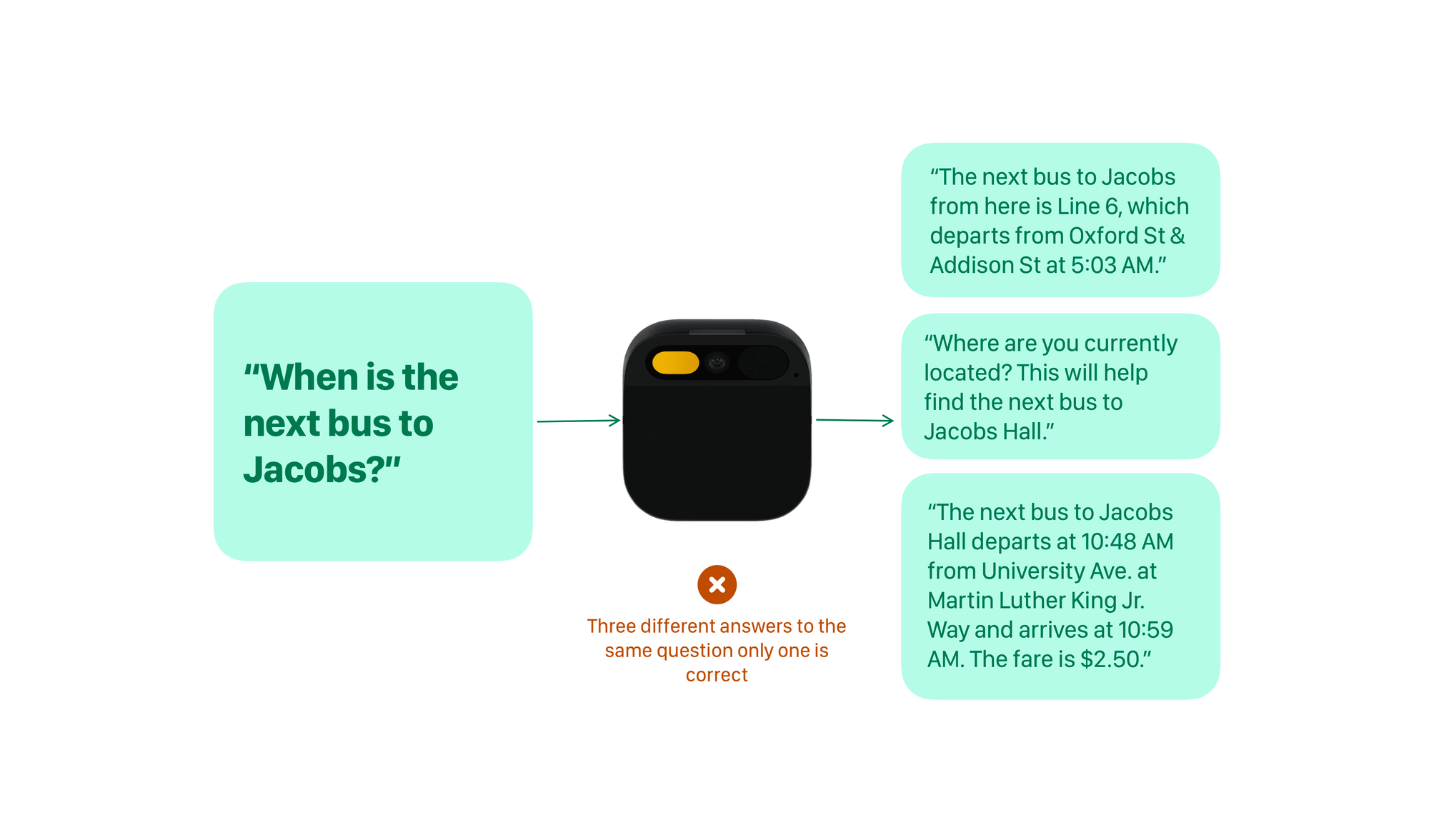

Here’s the thing: all of the actions I just showed you work with an accuracy of 50-80%. Very direct queries, like grocery lists, are more accurate; others, like bus schedules, are hit or miss. Essentially, you can ask the Ai Pin anything, but the odds of getting a correct response are roughly 50-50.

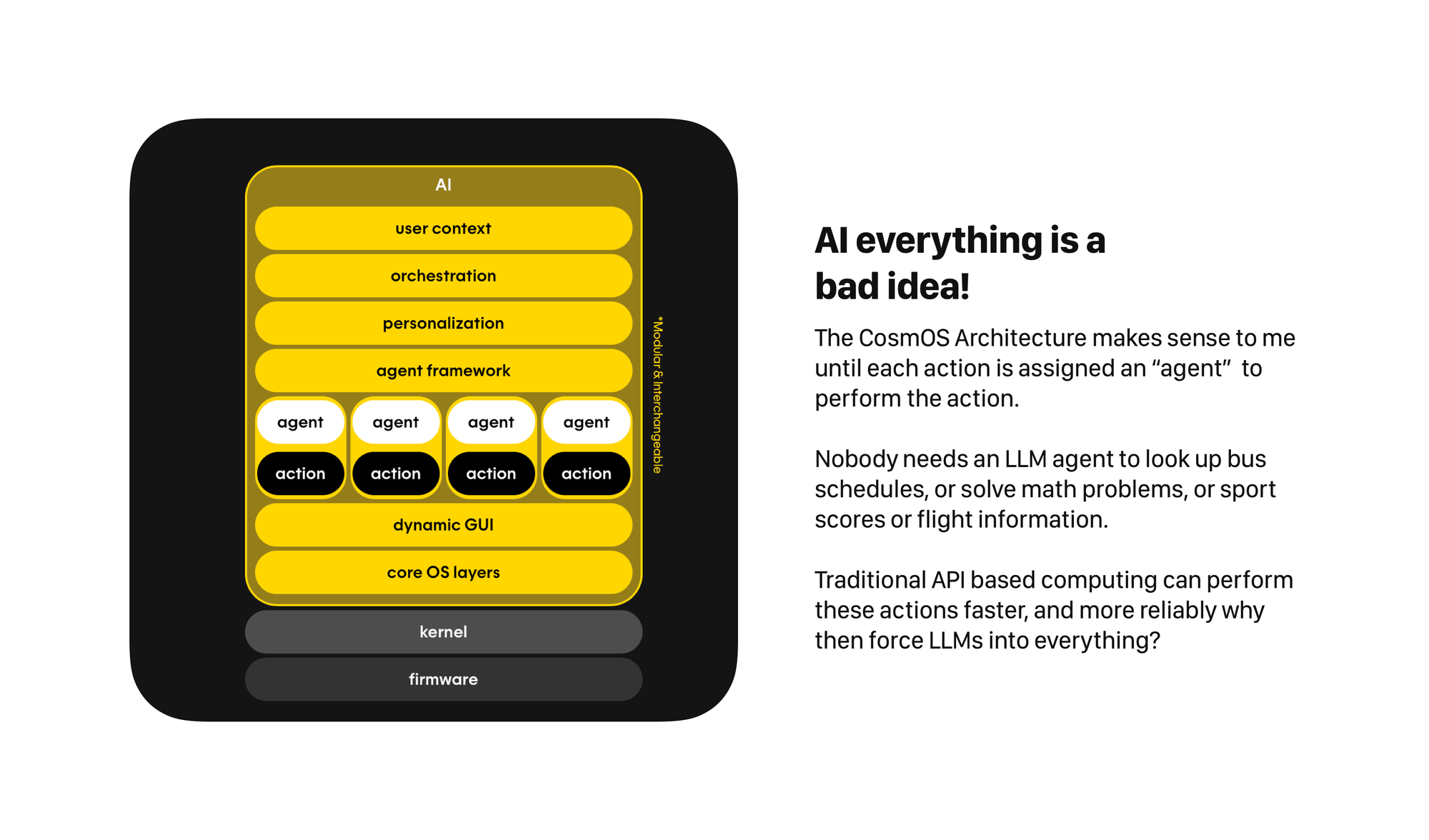

AI everything is a bad strategy - Unreliable mess

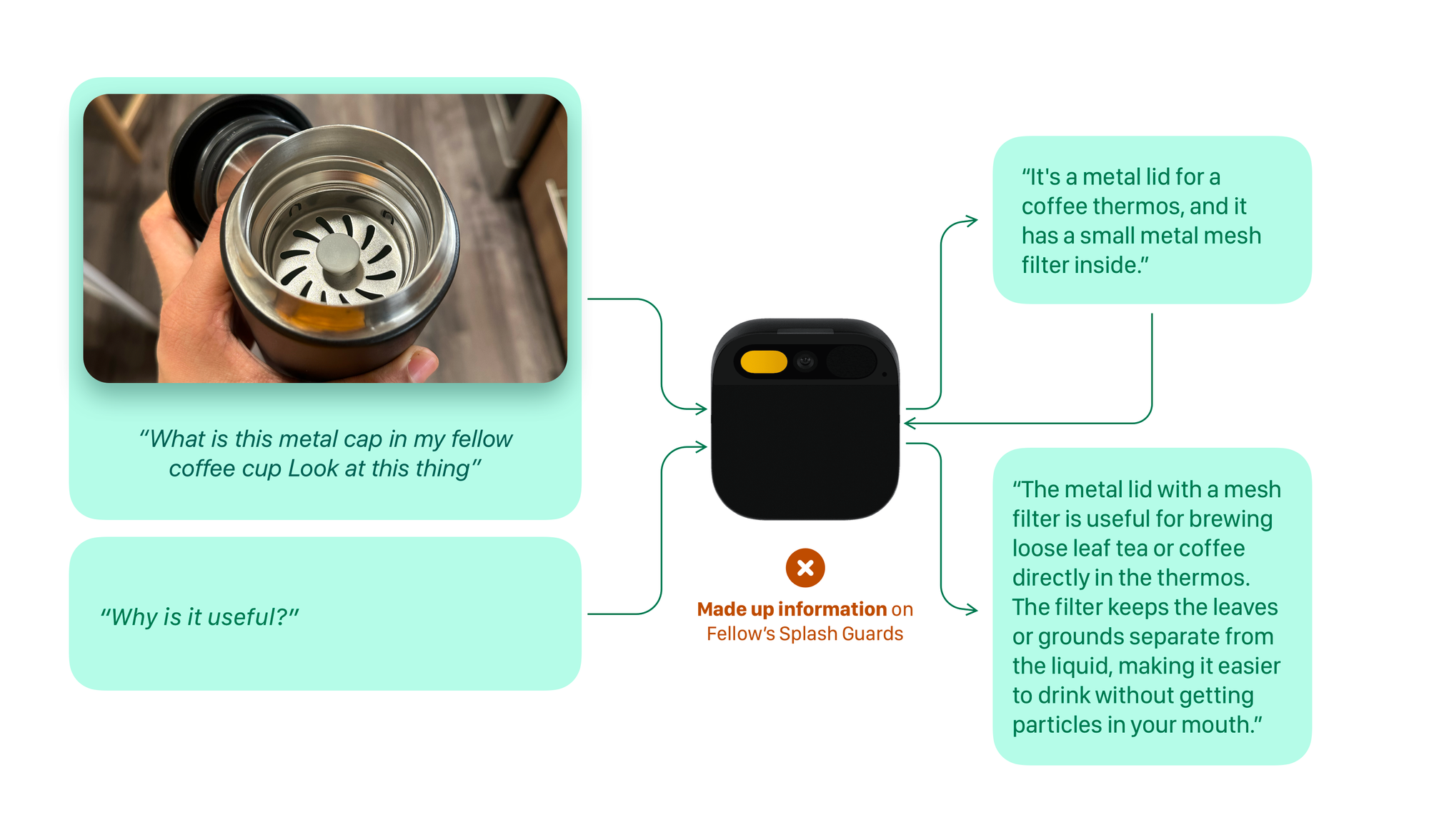

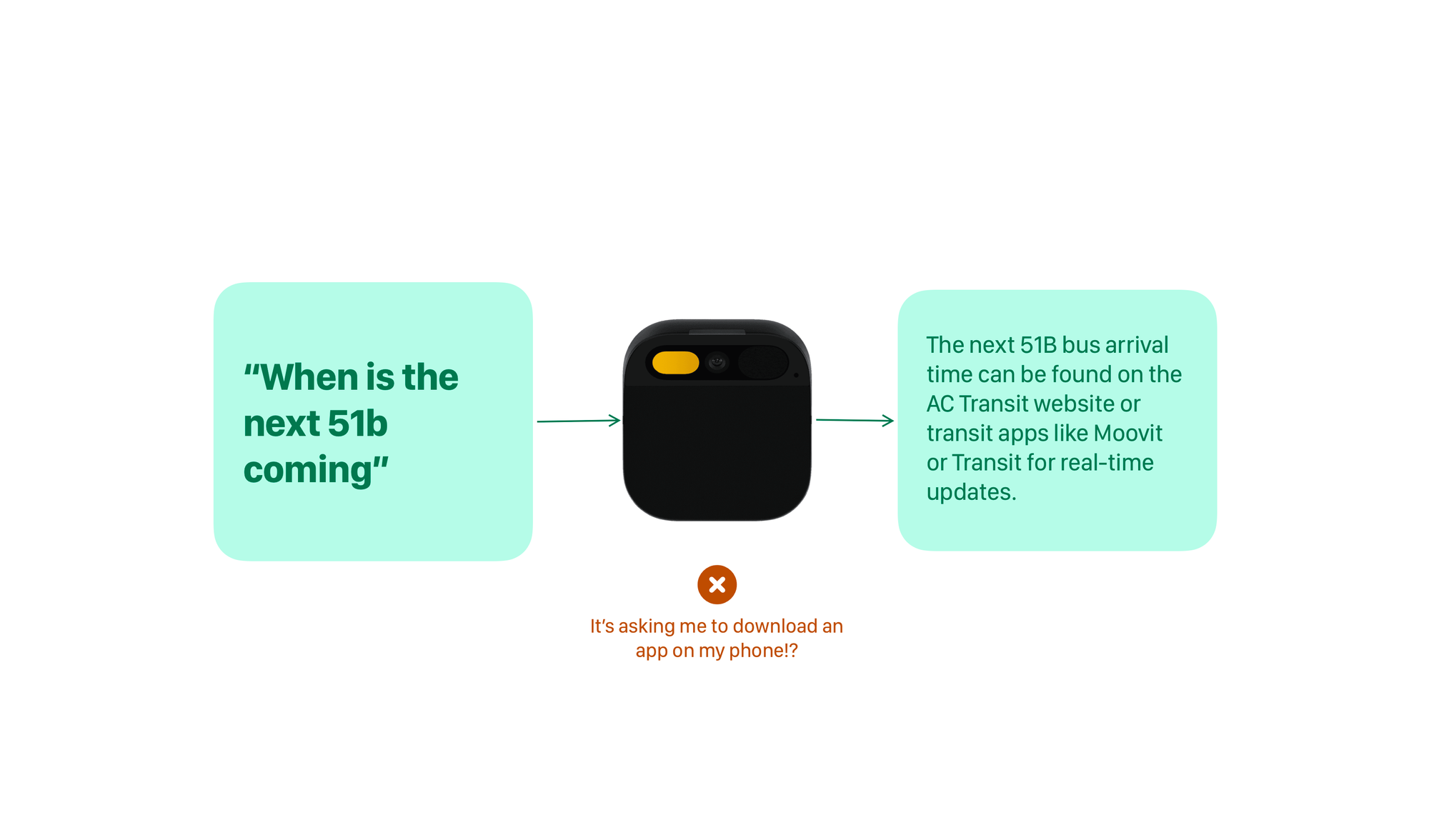

The Ai Pin is extremely unreliable at what it does, and the problem lies in its over-reliance on LLMs and a particularly wonky Google-scraping technique it uses when it searches the web for results (which happens more often than not).

I don’t need an LLM agent to tell me about a bus schedule. A simple API call can achieve that!

LLM-everything is a bad idea: it’s unreliable, it gives users false hope, and the hallucinations kill any ability to trust the information it provides.

Instant Information, but is it reliable?

And that’s the other flaw of the Ai Pin. Because it uses a mishmash of Google searches and LLM responses to generate its output, it can hallucinate at times. Remember the India vs Pakistan match example? I asked it when the match would start, and it told me 1:00 AM in Dubai!?! That’s obviously not true; it starts at 1:00 AM PST. It’s these little errors that make it difficult to trust the device as a source of information.

It’s instant information, yes. But is it reliable? No.

What can it do? (The General Magic Comparison)

Here’s a tip: whenever you hear “general purpose computing device” in a product pitch, run away. The people who designed it didn’t actually design for a user; they designed for a mythical “Joe Sixpack”, the general-purpose computer user who must use their “advanced technology in an intuitive way”.

The problem such a device runs into is that it builds out “cool demos” that show off the strengths of the technology, but forgets to actually design a product. Who is it for? What will the person do with it? What affordances can we give that person to accomplish the task? None of those questions get answered. Instead, what remains is a pack of demos. That’s what the Ai Pin is.

The UI has no affordances whatsoever to indicate any of the cool things I mentioned above. It can theoretically do anything, but it gives the user affordances for none of them.

Instead, the makers came up with a list of six apps they thought were great examples of how to use the product: Phone, Messages, Camera, Music, Timer and Settings. That’s the exact opposite of what I used the device for (or what anyone else did, for that matter).

There’s also no structure to what it can do; there’s no “here are the three things it’s actually good at” pitch. It’s exactly how the General Magic products went. There’s a reason people were perplexed when using General Magic’s product, and it’s the same reason people never found a use for the Ai Pin.

The interface and interactions were a miss.

When a device so boldly uses voice as its primary mode of input, you’d imagine that understanding different accents would be table stakes, right? (In fact, I’d go so far as to say that in 2025, multiple accents and multiple languages are table stakes: pick any AI app and see how good it is at picking up on accents, cultural nuances and multilingual conversations.)

The quality of the Ai Pin’s voice input is barely better than Siri’s. It constantly misunderstands words and gives erroneous responses. Sure, it has some smarts for contextually correcting the words it recognizes, but they don’t cut the mustard for the experience as a whole, especially not when voice is the only mode of interaction for most tasks.

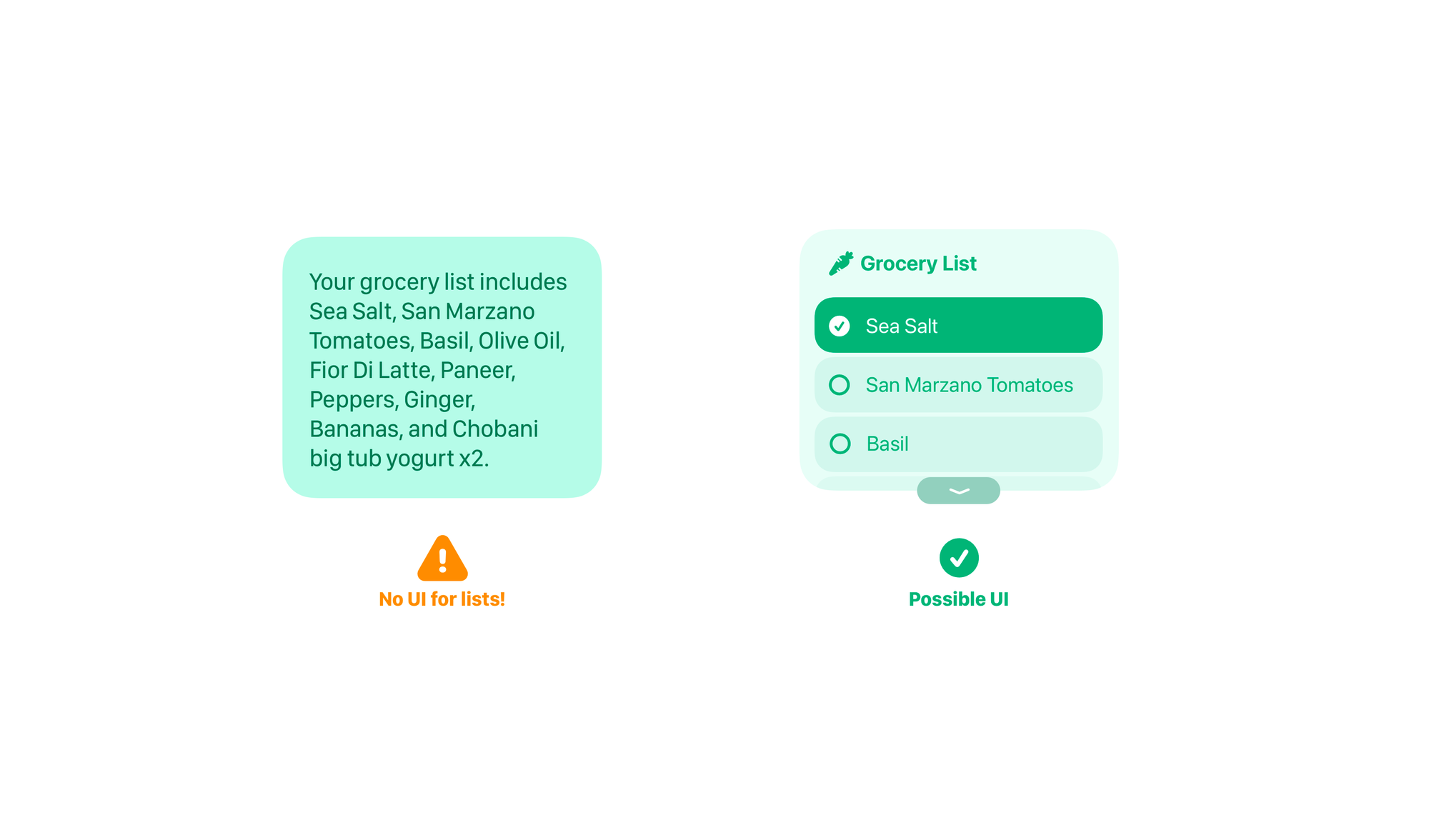

I felt the projected interface wasn’t too bad: not the easiest thing to use, but in general OK to interact with. The only problem is that most content doesn’t have a UI. Humane made bold claims about creating AI-generated interfaces and AI-generated apps on the fly, but it never shipped anything beyond text responses. And as you can imagine, a text response is rarely a good way to parse information.

Of course, the projector as a display was a half-baked solution as well. It just didn’t work in daylight, and the limitation of it only working on my palm was finicky, to say the least.

Missed Opportunity

Given how powerful its contextual awareness is, it baffles me that the Ai Pin doesn’t surface or suggest actions based on a person’s location. If the Pin knows I’m at a grocery store, why not show me my grocery list the moment I raise my hand? If I’m out for lunch, why not show me suggestions for nearby places? If the Pin knows I’m sitting in my workspace in the morning, it’s a safe bet I’ll want the day’s to-do list and to catch up on my mail. Why is that not part of the UI? Why stick only to the standard set of controls?

The Form Factor

Another much-talked-about aspect is its form. Why a pin? Why not glasses? I, for one, disagree that eyeglasses should be the only form factor we consider when cameras are involved.

The Ai Pin’s camera position wasn’t awkward. I never had an issue with it capturing input; it almost always saw the image clearly. What made it an uncomfortable social accessory was that it felt like a body cam on my chest. While most of my testing and research took place in the controlled setting of my work studio, I did notice a range of changes in social interactions whenever the Ai Pin made an appearance. Some people were thrilled to see something futuristic. Others were rightfully uncomfortable: a fellow student (who eventually became a good friend) almost heckled me for recording them when they saw me walk in with the Ai Pin one summer morning. With others, the dynamics shifted, and I could feel myself being judged. Needless to say, I avoided wearing it on a lot of occasions.

Something like the Pin that’s always on the front of your body also has a big issue: it’s difficult to hug anyone with the thing on. Clearly, the people who built this didn’t give each other enough hugs. It’s the most awkward thing ever.

As cool as the vision demos look, I think the Pin shouldn’t have had a camera. Its real benefit was its ability to understand context and organize information, but the camera setup not only made the device extremely bulky, it also made it a bad wearable. Take the iPod shuffle, for example: it’s tiny, and I’m still comfortable wearing one. The Ai Pin could’ve been just that! Sadly, wearability felt like an afterthought with this device.

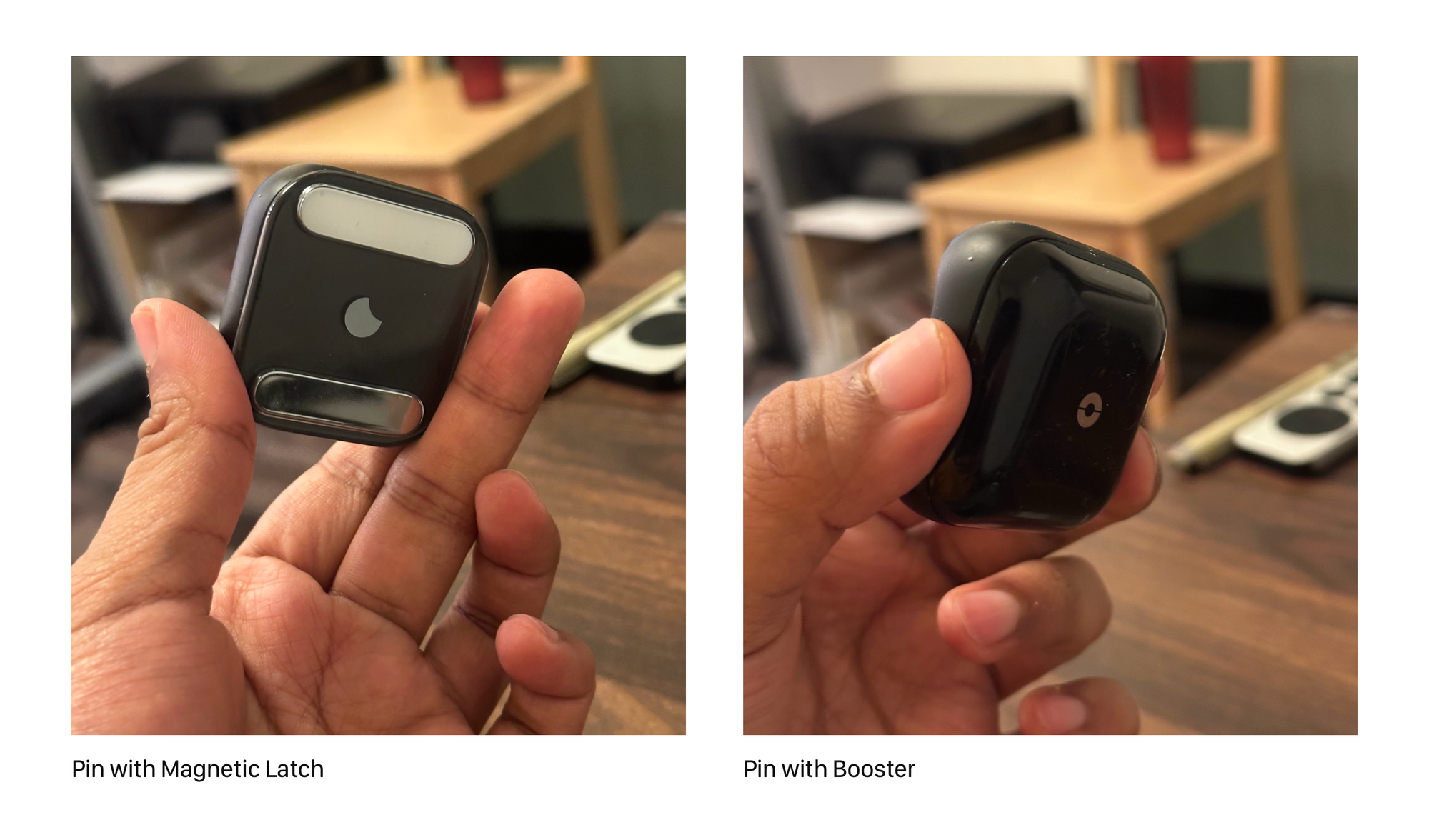

On a side note, I did like that they offered a lighter magnetic latch, which was better suited to T-shirts and sweaters.

A Prototyper’s vision of a product

At Config, Figma’s conference, last year, Imran Chaudhri (Humane’s co-founder) and design lead George Kedenburg III took the stage to talk about the Ai Pin. The presentation was enlightening in two respects. First, it showed that the team was still trying to understand and position their product; back then, they seemed to think of it as a contextual computer. Second, it showed that they are a group of “prototypers” who have somehow convinced themselves that their compelling prototypes of AI interactions will make a great product too.

At some point in the conversation, Kedenburg showed a prototype calculator app he had willed into existence using code-gen tools. The idea was “apps on the fly”. Sure, one day people will be able to wish apps into existence, and that was a decent demonstration of the concept. But then Kedenburg said this could soon be a feature of the Ai Pin, which is where he lost me. The work required to make an app, maintain it, ensure it does what the person wants, and build a feedback loop to fix bugs and improve performance, all on top of the novel concept of using LLMs to create an app from natural language, is impossible in the span of six months. That level of delusion showed how little regard they had for the process of going from a prototype to a product.

The gulf between a great product and a great prototype is massive. Prototypes are meant to show how something works; they’re artifacts that accompany an idea to communicate it better. A product, on the other hand, is more well-rounded: it understands the people who would benefit from it, it is designed with them, and it is designed with a lifespan in mind. It’s also supposed to be very reliable at what it does.

Seen through that lens, the Ai Pin is a very elaborate prototype of today’s LLM capabilities: it can search the web for you, it can organize your ideas, it can generate grocery lists and to-do lists based on your interests, and it has a lot of cool micro-demos. Yet it’s a terrible product. People who pick it up don’t know what to do with it, and when they do, it’s difficult to get it to work reliably.

Despite all of the Pin’s flaws, I still think it’s commendable that a team came up with a vision of computing and executed on it. They shipped something new and unique, and that’s an achievement in itself. To be really honest, I’ll miss the device. It had its quirks and was bad at a lot of things, but there were moments when I really enjoyed using it, moments when the way it worked felt almost magical.

Do I regret paying Humane $700 plus several months of subscription fees? Absolutely not. I learnt so much about designing emerging technologies, the social aspects of new artifacts and how technology

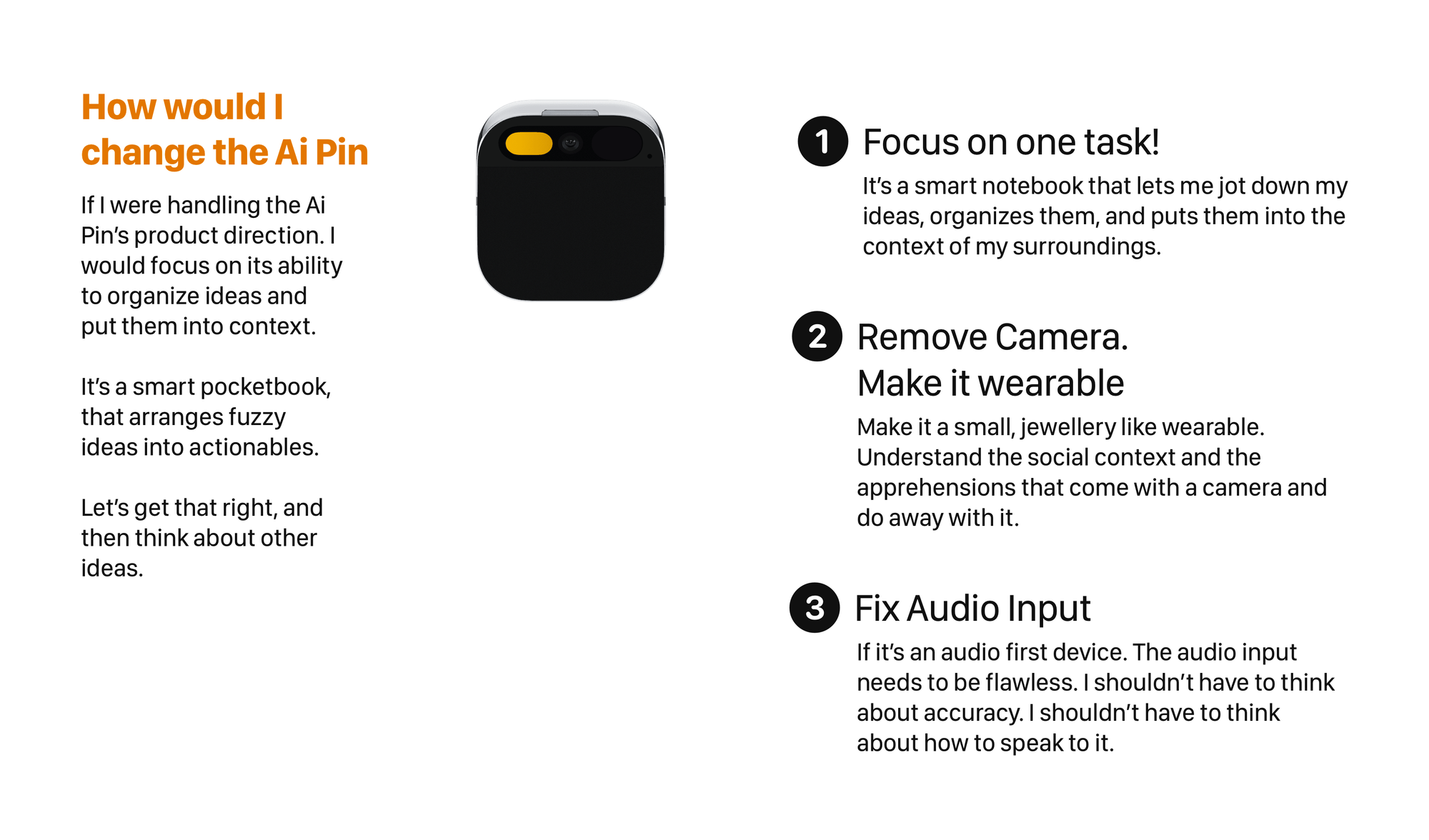

Artifacts don’t exist in a vacuum; they exist in a world, and within its many systems, that reinforce today’s status quo. Humane wanted to replace the phone, but sadly it never understood how its product would fit into the lives of people for whom the smartphone wasn’t a problem. It spent so much time dreaming up the interface for the future of human-computer interaction that it lost sight of the problems it wanted to solve. The result was something that lacked clarity, tried to do too much, and yet was never reliable at most of it.